I am a Ph.D. student in computer science at Columbia University, advised by Prof. Yunzhu Li. Before that, I obtained my Bachelor's degree from Tsinghua University (Yao Class). I have been fortunate to receive mentorship from Prof. Kris Hauser during my Ph.D. studies, and from Prof. Xiaolong Wang, Prof. Yang Gao, and Prof. Li Yi during my undergraduate studies. My research sits at the intersection of robotics, 3D vision, physics simulation, and machine learning. I am interested in bridging the gap between robotic simulation and the real world for robust and scalable robot manipulation. If you'd like to discuss research opportunities, collaborations, Ph.D. applications, or anything related, feel free to reach out via email: kaifeng dot z at columbia dot edu.

Google Scholar / GitHub / Twitter / LinkedIn / CV

Photo credit: Bingjie Tang

* indicates equal contribution. Representative papers are highlighted.

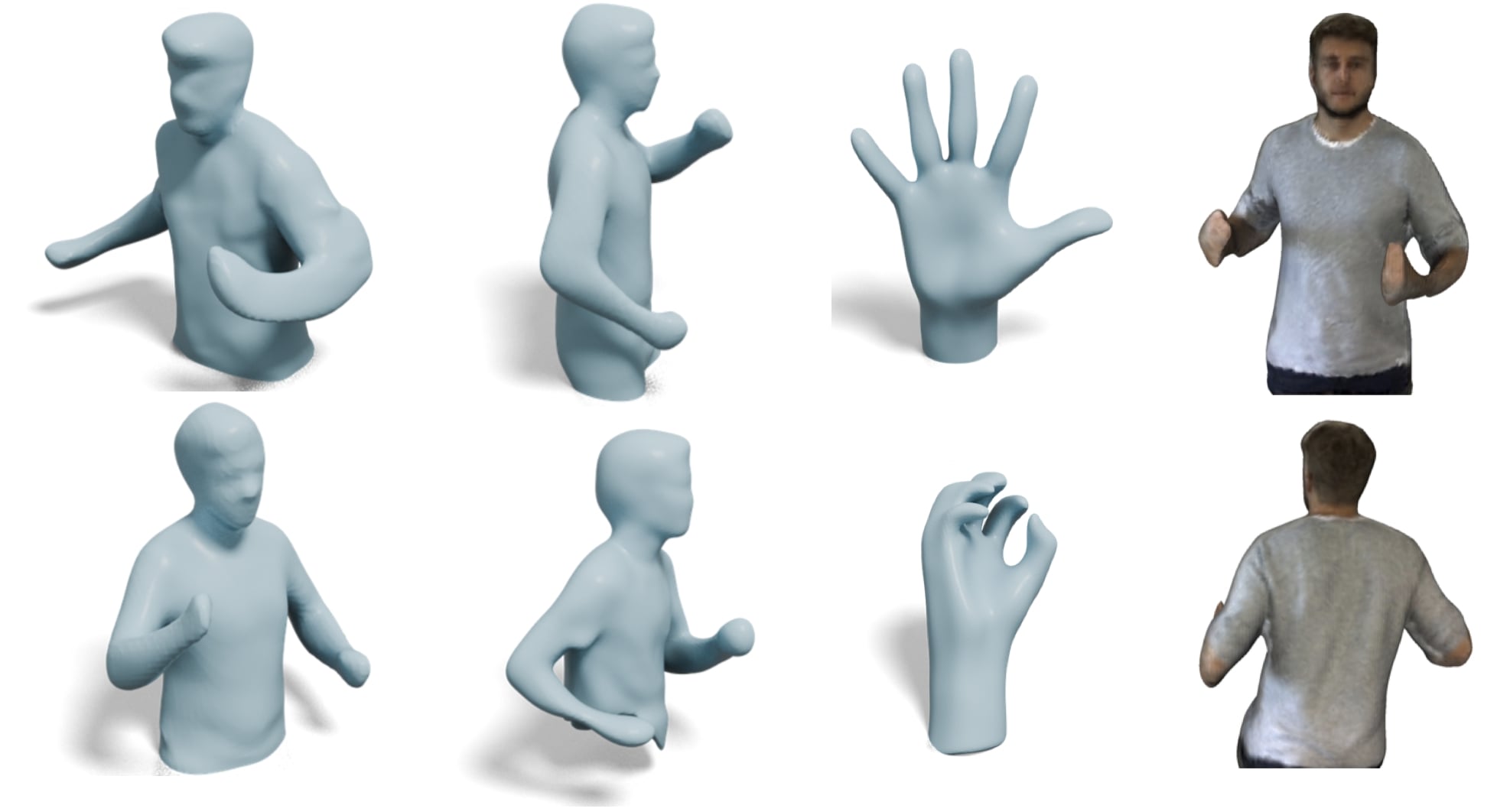

Kaifeng Zhang*, Shuo Sha*, Hanxiao Jiang, Matthew Loper, Hyunjong Song, Guangyan Cai, Zhuo Xu, Xiaochen Hu, Changxi Zheng, Yunzhu Li
arXiv, 2025
website / arXiv / pdf / code

We propose a framework for evaluating robot policies in simulation, using Gaussian Splatting for rendering and soft-body digital twins for dynamics.

Heng Zhang*, Gehan Zheng*, Kaifeng Zhang, Hyunjong Song, Shivansh Patel, Xiaochen Hu, Yunzhu Li, Changxi Zheng, Peter Yichen Chen
IROS 2025 RoDGE Workshop

We present an interactive digital twin construction (real-to-sim) framework that learns the full dynamics of elastoplastic articulated objects from videos.

Hanxiao Jiang, Hao-Yu Hsu, Kaifeng Zhang, Hsin-Ni Yu, Shenlong Wang, Yunzhu Li
International Conference on Computer Vision (ICCV), 2025
website / arXiv / pdf / code

We optimize a spring-mass physics model of deformable objects and integrate it with 3D Gaussian Splatting for real-time re-simulation and rendering.

Kaifeng Zhang, Baoyu Li, Kris Hauser, Yunzhu Li
Robotics: Science and Systems (RSS), 2025
website / arXiv / pdf / code / demo

We propose a neural particle-grid model for training dynamics models on real-world sparse-view RGB-D videos, enabling high-quality future prediction and rendering.

Mingtong Zhang*, Kaifeng Zhang*, Yunzhu Li
Conference on Robot Learning (CoRL), 2024
website / arXiv / pdf / code / demo

We learn neural dynamics models of objects from real perception data and combine the learned model with 3D Gaussian Splatting for action-conditioned predictive rendering.

Kaifeng Zhang*, Baoyu Li*, Kris Hauser, Yunzhu Li
Robotics: Science and Systems (RSS), 2024
ICRA 2024 RMDO Workshop (Best Abstract Award)
website / arXiv / pdf / code

We learn a material-conditioned neural dynamics model using a graph neural network, enabling predictive modeling of diverse real-world objects and efficient manipulation via model-based planning.

Xiaoyan Cong, Haitao Yang, Liyan Chen, Kaifeng Zhang, Li Yi, Chandrajit Bajaj, Qixing Huang
arXiv, 2024

We achieve 4D neural implicit reconstruction from a single-view scan alone, using deformation and topology regularizations.

Kaifeng Zhang, Yang Fu, Shubhankar Borse, Hong Cai, Fatih Porikli, Xiaolong Wang
International Conference on Learning Representations (ICLR), 2023
website / arXiv / pdf / code

We propose a fully self-supervised method for category-level 6D object pose estimation by learning dense 2D-3D geometric correspondences. Our method can train on image collections without any 3D annotations.

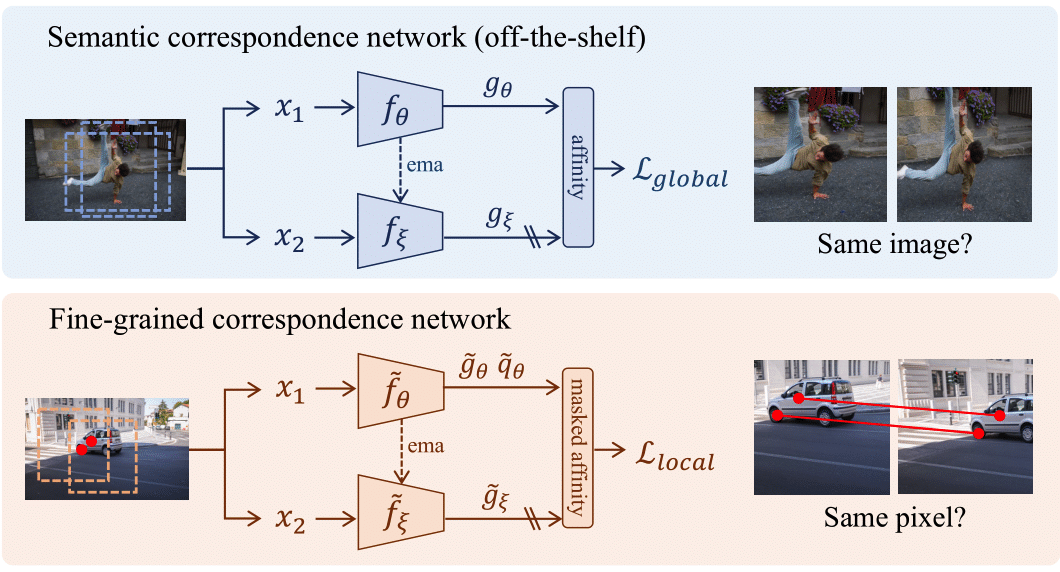

Yingdong Hu, Renhao Wang, Kaifeng Zhang, Yang Gao
European Conference on Computer Vision (ECCV), 2022 (Oral)
arXiv / pdf / code

We show that fusing fine-grained features learned with low-level contrastive objectives and semantic features learned with image-level objectives can improve self-supervised learning (SSL) pretraining.

Template borrowed from Jon Barron