Abstract

While 6D object pose estimation has wide applications across computer vision and robotics, it remains far from being solved due to the lack of annotations. The problem becomes even more challenging when moving to category-level 6D pose, which requires generalization to unseen instances. Current approaches are restricted by leveraging annotations from simulation or collected from humans. In this paper, we overcome this barrier by introducing a self-supervised learning approach trained directly on large-scale real-world object videos for category-level 6D pose estimation in the wild. Our framework reconstructs the canonical 3D shape of an object category and learns dense correspondences between input images and the canonical shape via surface embedding. For training, we propose novel geometrical cycle-consistency losses which construct cycles across 2D-3D spaces, across different instances and different time steps. The learned correspondence can be applied for 6D pose estimation and other downstream tasks such as keypoint transfer. Surprisingly, our method, without any human annotations or simulators, can achieve on-par or even better performance than previous supervised or semi-supervised methods on in-the-wild images.

Video

Method Overview

Given the input image and a categorical canonical mesh learned by our model, we extract image per-pixel and mesh per-vertex embeddings, and establish dense geometric correspondences via feature similarity in the embedding space.

Cycle Consistency

Given the input image and a categorical canonical mesh learned by our model, we extract image per-pixel and mesh per-vertex embeddings, and establish dense geometric correspondences via feature similarity in the embedding space.

We propose novel losses that establish cycle consistency across 2D and 3D space. The instance cycle consistency loss encourages the consistency between correspondence and an estimated camera projection. The cross-instance and cross-time cycle consistency loss goes beyond a single image-mesh pair to cross-instance and cross-time images, encouraging category-level semantic consistency.

Results

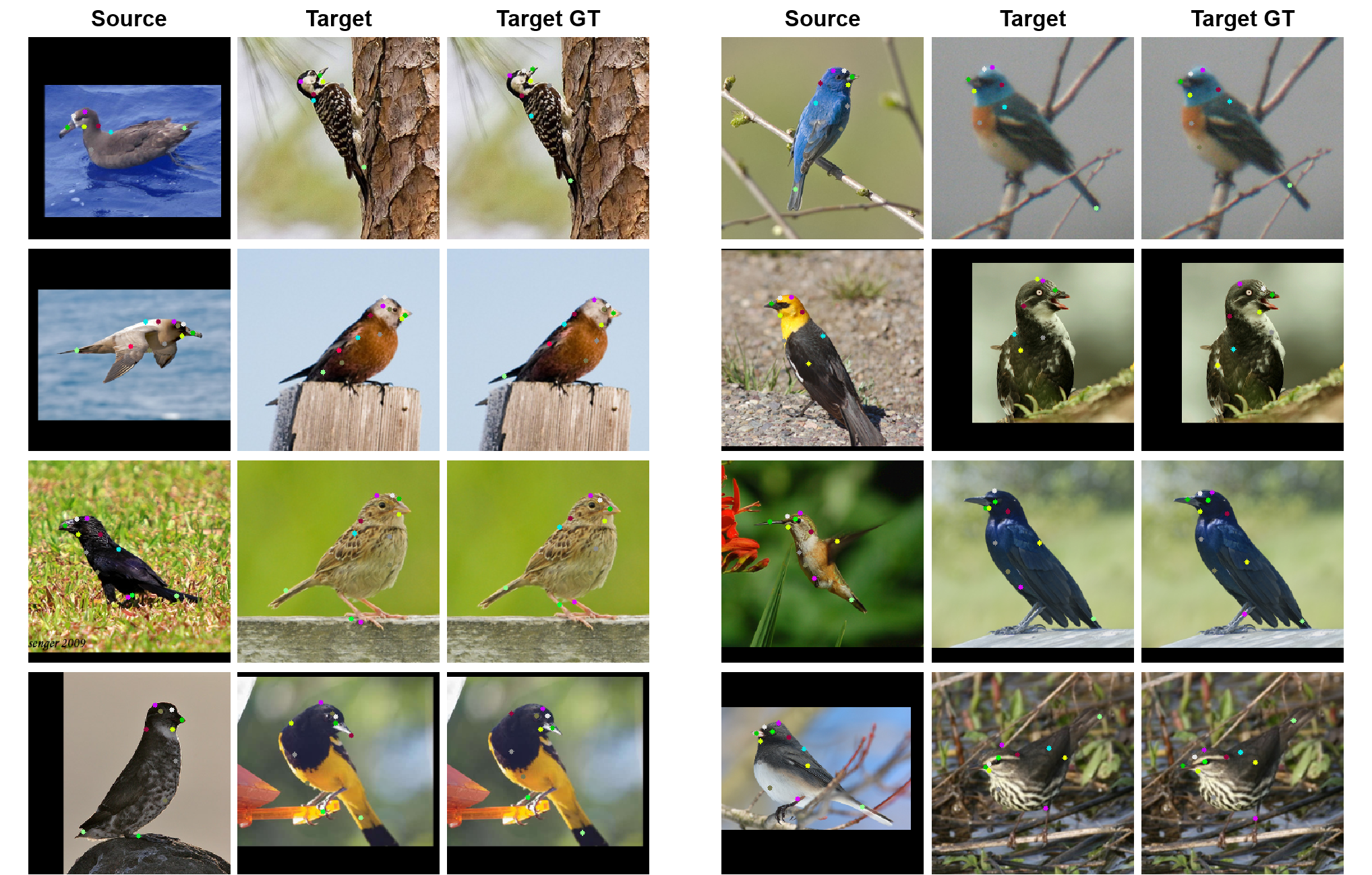

We evaluate our method on the Wild6D, NOCS-REAL275 and CUB-200-2011. On Wild6D and REAL275, we visualize the correspondence and pose estimation results. The model is trained on Wild6D training set which contains no 3D annotations, and tested on Wild6D test set and REAL275 test set by zero-shot transfer. On CUB-200-2011, we visualize the correspondence and keypoint transfer results.

Wild6D

REAL275

CUB-200-2011

BibTeX

@article{zhang2022self,

author = {Zhang, Kaifeng and Fu, Yang and Borse, Shubhankar and Cai, Hong and Porikli, Fatih and Wang, Xiaolong},

title = {Self-Supervised Geometric Correspondence for Category-Level 6D Object Pose Estimation in the Wild},

journal = {arXiv preprint arXiv:2210.07199},

year = {2022},

}